Today we are going to learn how to scrape a website in python. We will be scraping google to find results and store them as JSON.

Requirements

For web scraping we would require two libraries:

- requests

- bs4

Just install them with pip as:

1

2

3

pip3 install requests bs4

Starting

first we would need to import the required libraries:

1

2

3

4

5

import requests

from bs4 import BeautifulSoup

import json

Suppose we search “foo bar” in google the URL looks like this:

https://www.google.com/search?q=foo+bar

This means that if we want to search a custom search query we just replace all the spaces with + and add it to the end of https://www.google.com/search?q=.

In python this would look like:

1

2

3

4

5

BaseUrl="https://www.google.com/search?q="

SearchTerm="foo+bar"

Url=BaseUrl+SearchTerm

We will use requests to get HTML from this link which looks like:

1

2

3

r=requests.get(Url)

r.content will contain the HTML code.

Searching HTML through BeautifulSoup

We first need to find out which class a single search result belong to, which we can do by clicking inspect element on a result.

from this photo we can see that the title of the search is in a “h3”.So we just need to find all the “h3” elements. Using BeautifulSoup we can do that as:

1

2

3

4

5

soup=BeautifulSoup(r.content,"html.parser")

titles=soup.find_all("h3")

titles=titles[:-1]

The last element is related searches so i truncated it. As we can see from the screen shot the links are just the prvious a tag to h3 so ew can get the links by:

1

2

3

4

5

links=[]

for title in titles:

links.append(title.find_previous("a"))

Storing it as JSON

We can store the information in a python list of dictionaries and output it as JSON. We will get the text from “h3” and the “href” from “a” element.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

ResultsList=[]

ResultDict={}

for title,link in zip(titles,links):

ResultDict={}

# skipping google search results

if link["href"][7:22]=="/www.google.com":

continue

ResultDict["title"]=title.text

# getting the actual URL from "href"

ResultDict["link"]=link["href"][7:].split("&")[0]

ResultsList.append(ResultDict)

print(json.dumps(ResultsList,indent=4))

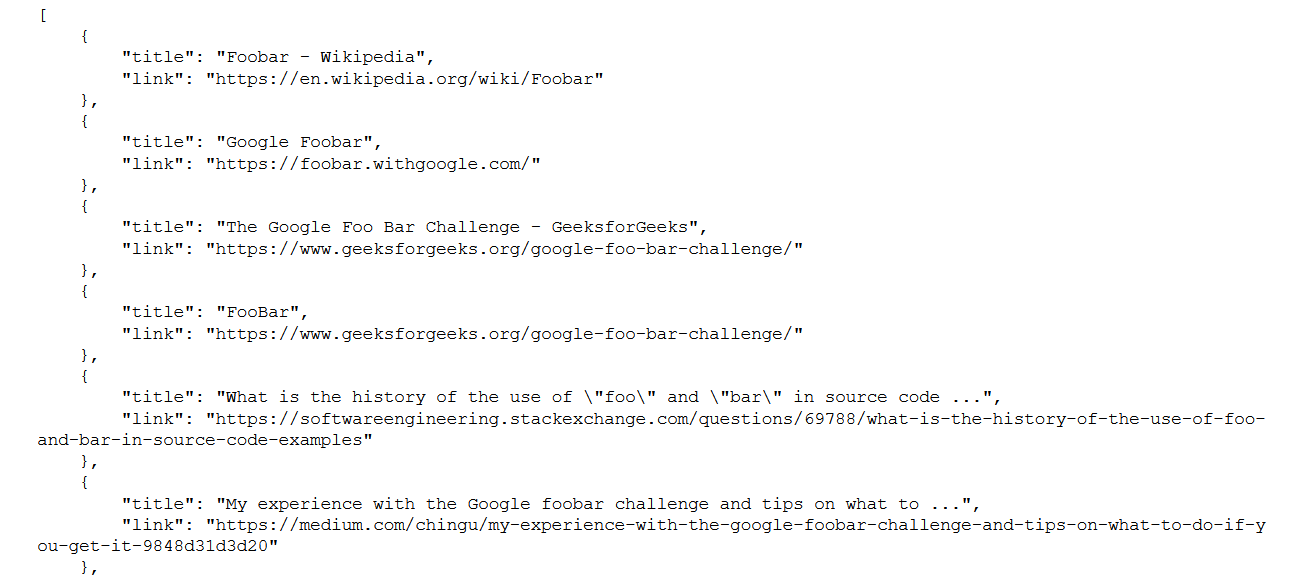

The output looks like:

Entire Code

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

import requests

from bs4 import BeautifulSoup

import json

BaseUrl="https://www.google.com/search?q="

SearchTerm="foo+bar"

Url=BaseUrl+SearchTerm

r=requests.get(Url)

soup=BeautifulSoup(r.content,"html.parser")

titles=soup.find_all("h3")

titles=titles[:-1]

links=[]

for title in titles:

links.append(title.find_previous("a"))

ResultsList=[]

ResultDict={}

for title,link in zip(titles,links):

ResultDict={}

# skipping google search results

if link["href"][7:22]=="/www.google.com":

continue

ResultDict["title"]=title.text

# getting the actual URL from "href"

ResultDict["link"]=link["href"][7:].split("&")[0]

ResultsList.append(ResultDict)

print(json.dumps(ResultsList,indent=4))

Conclusion

You can take this one step further by taking the search term as an input from the user. I have only show you a small part of what Beautiful Soup can do, for more information go here. Have fun experimenting with this!